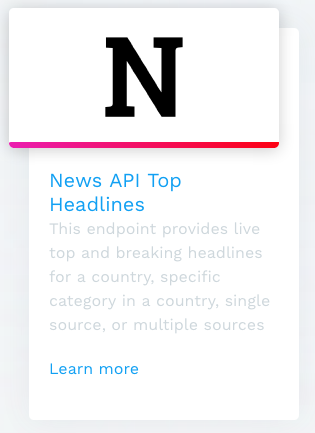

We wanted to break down the technical details of how we are profiling APIs for inclusion in the Streamdata.io API Gallery. We have developed a formal process for profiling API providers that we feel is pretty robust, and it is something we are looking to share and evolve out in the open as part of the wider API community. We consider it to be an open source process for profiling valuable API resources, and it is something we’d like to encourage API providers and other API brokers to put to work for themselves, whether or not they participate as part of the Streamdata.io API Gallery.

GitHub as a Base

Every new “entity” we are profiling starts with a GitHub repository and a README, within a single API Gallery organization for entities. Right now this is still pretty manual, as we prefer having the human touch when it comes to qualifying and on-boarding each entity. What we consider an “entity” is flexible: most smaller API providers have a single repository, while larger ones are broken down by line of business, to help reduce API definitions and the conversation around APIs down to the smallest possible unit. Every API service provider we are profiling gets its own GitHub repository, subdomain, set of definitions, and conversation around what is happening. Here are the base elements contained within each GitHub repo:

– OpenAPI – A JSON or YAML definition for the surface area of an API, including the requests, responses, and underlying schema used as part of both.

– Postman Collection – A JSON Postman Collection that has been exported from the Postman client after importing the complete OpenAPI definition, and fully tested.

– APIs.json – A YAML definition of the surface area of an API’s operation, including home page, documentation, code samples, sign up and login, terms of service, OpenAPI, Postman Collection, and other relevant details that will matter to API consumers.

The objective is to establish a single repository that can be continuously updated and integrated into other API discovery pipelines. The goal is to establish a complete (enough) index of the APIs using OpenAPI, make it available in a more runtime fashion using Postman, and then index the entire API operations, including the two machine readable definitions of the API(s), using APIs.json. This provides a single URL that you can ingest and use to integrate with an API, and make sense of API resources at scale.

Everything Is OpenAPI Driven

Each API’s definition still uses OpenAPI version 2.0, but we are looking to shift towards version 3.0 as soon as we possibly can. Each entity will contain one or more OpenAPI definitions located in the _openapi folder, as well as individual, more granular OpenAPI definitions for each API path within the _listings folder. Each OpenAPI should contain complete details for the following areas:

– info – Provide as much information about the API as possible.

– host – Provide a host, or variables describing host.

– basePath – Document the basePath for the API.

– schemes – Provide any schemes that the API uses.

– produces – Document which media types the API uses.

– paths – Detail the paths including methods, parameters, enums, responses, and tags.

– definitions – Provide schema definitions used in all requests and responses.

While it is tough to define what is complete when crafting an OpenAPI definition, every path should be represented, with as much detail as possible about the surface area of requests and responses. It is the detail that really matters: ensuring all parameters and enums are present, and that paths are properly tagged based upon the resources being made available. The more OpenAPI definitions you create, the more you get a feel for what is a “complete” OpenAPI definition. This becomes real once you create a Postman Collection for the API definition and begin to actually try to use an API.
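As a rough illustration of what "complete" can mean in practice, here is a minimal Python sketch that checks an OpenAPI 2.0 definition for the top-level areas listed above, and flags operations without tags or responses. The function name and the specific checks are assumptions made for this example, not part of our actual tooling.

```python
# Top-level OpenAPI 2.0 fields the profiling process expects to be filled in.
REQUIRED_FIELDS = ["info", "host", "basePath", "schemes", "produces", "paths", "definitions"]

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def completeness_report(openapi):
    """Return a list of human-readable gaps found in an OpenAPI 2.0 dict."""
    gaps = [f"missing or empty top-level field: {name}"
            for name in REQUIRED_FIELDS if not openapi.get(name)]
    for path, item in openapi.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            label = f"{method.upper()} {path}"
            if not operation.get("tags"):
                gaps.append(f"{label}: no tags")
            if not operation.get("responses"):
                gaps.append(f"{label}: no responses")
    return gaps


# A deliberately incomplete, hypothetical definition.
example = {
    "swagger": "2.0",
    "info": {"title": "Example API", "version": "1.0"},
    "host": "api.example.com",
    "basePath": "/v1",
    "schemes": ["https"],
    "paths": {
        "/items": {
            "get": {"responses": {"200": {"description": "A list of items"}}}
        }
    },
}

for gap in completeness_report(example):
    print(gap)
```

A check like this only catches structural gaps. Whether the parameters, enums, and responses are actually right still comes down to trying the API.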

Validating With A Postman Collection

Once you have a complete OpenAPI definition, the next step is to validate the work using the Postman client. You simply import the OpenAPI into the client, and get to work validating that each API path actually works. To validate you will need an API key and any other authentication details, and then you work your way through each path, validating that the OpenAPI is indeed complete. If there are missing sample responses, the Postman portion of this API discovery journey is where we find them, grabbing validated responses, anonymizing them, and pasting them back into the OpenAPI definition. Once we have worked our way through every API path and verified it works, we export a final Postman Collection for the API we are profiling.
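The step of anonymizing a validated response and pasting it back into the OpenAPI can be sketched in Python as follows. This is a hedged example: the list of sensitive keys, the "REDACTED" placeholder, and both function names are assumptions for illustration. The only thing taken from the OpenAPI 2.0 format itself is that a response can carry an `examples` object keyed by media type.

```python
import copy

# Hypothetical set of keys treated as sensitive; adjust per API.
SENSITIVE_KEYS = {"email", "token", "api_key", "phone"}


def anonymize(value):
    """Recursively replace values stored under sensitive keys."""
    if isinstance(value, dict):
        return {k: "REDACTED" if k in SENSITIVE_KEYS else anonymize(v)
                for k, v in value.items()}
    if isinstance(value, list):
        return [anonymize(v) for v in value]
    return value


def attach_example(openapi, path, method, status, response_body,
                   media_type="application/json"):
    """Return a copy of the definition with an anonymized sample response."""
    updated = copy.deepcopy(openapi)
    response = updated["paths"][path][method]["responses"][status]
    response.setdefault("examples", {})[media_type] = anonymize(response_body)
    return updated


# A hypothetical definition and a response captured while testing in Postman.
definition = {"paths": {"/users": {"get": {"responses": {"200": {"description": "OK"}}}}}}
captured = [{"id": 1, "email": "jane@example.com"}]

updated = attach_example(definition, "/users", "get", "200", captured)
print(updated["paths"]["/users"]["get"]["responses"]["200"]["examples"])
```

Working on a copy keeps the original definition untouched until the anonymized example has been reviewed by a human.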

Indexing API Operations With APIs.json

At this point in the process we upload the OpenAPI and Postman Collection to the GitHub repository for the API entity that is being targeted. Then we get to work on an APIs.json for the API operations. This portion of the process is about documenting the OpenAPI and Postman Collection that were just created, but then also doing the hard work of identifying everything else an API provider offers to support their operations: the essential items that will be needed to get up and running with API integration. Here are a handful of the essential building blocks we are currently documenting:

– Website – The primary website for an entity owning the API.

– Portal – The URL to the developer portal for an API.

– Documentation – The direct link to the API documentation.

– OpenAPI – The link to the OpenAPI we’ve created on GitHub.

– Postman – The link to the Postman Collection we created on GitHub.

– Sign Up – Where you sign up for an API.

– Pricing – A link to the plans, tiers, and pricing for an API.

– Terms of Service – A URL to the terms of service for an API.

– Twitter – The Twitter account for the API provider, ideally API specific.

– GitHub – The GitHub account or organization for the API provider.

This list represents the essential things we are looking for. We have identified almost 200 other building blocks we document as part of API operations, ranging from SDKs to training videos. The presence of these building blocks tells us a lot about the motivations behind an API platform, and sends a wealth of signals that can be tuned into by humans, or programmatically, to understand the viability and health of an API. We are prioritizing these ten building blocks, but will be incorporating many of the others into our profiling and ranking in the future.
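To make the index more concrete, here is a minimal Python sketch of an APIs.json-style document built from the ten building blocks, along with a helper that reports which blocks are still missing. The overall shape (an `apis` list with `humanURL`, `baseURL`, and `properties` of `type` and `url`) loosely follows the APIs.json format, but the property type labels, function names, and example URLs are assumptions made for this example.

```python
import json

# Labels for the ten essential building blocks; illustrative names, not
# official APIs.json property types.
ESSENTIAL_BLOCKS = [
    "website", "portal", "documentation", "openapi", "postman",
    "signup", "pricing", "terms-of-service", "twitter", "github",
]


def build_index(name, description, base_url, links):
    """Build an APIs.json-style dict from a mapping of block label to URL."""
    properties = [
        {"type": block, "url": links[block]}
        for block in ESSENTIAL_BLOCKS if block in links
    ]
    return {
        "name": name,
        "description": description,
        "apis": [{
            "name": name,
            "humanURL": links.get("portal", ""),
            "baseURL": base_url,
            "properties": properties,
        }],
    }


def missing_blocks(index):
    """Return the essential building blocks that have not been documented yet."""
    found = {p["type"] for api in index["apis"] for p in api["properties"]}
    return [block for block in ESSENTIAL_BLOCKS if block not in found]


index = build_index(
    "Example API",
    "A hypothetical API used for illustration.",
    "https://api.example.com/v1",
    {
        "website": "https://example.com",
        "portal": "https://developer.example.com",
        "openapi": "https://example.com/openapi.json",
    },
)
print(json.dumps(index, indent=2))
print(missing_blocks(index))
```

The list of missing blocks doubles as a to-do list for whoever is profiling the provider, and as a simple signal of how much of an API's operations has been documented.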

Continuously Integratable API Definition

Now we should have the entire surface area of an API and its operations defined in a machine readable format. The APIs.json, OpenAPI, and Postman Collection can be used to on-board new developers, and can be used by API service providers looking to provide valuable services to the space. Ideally, each API provider is involved in this process, and once we have a repository set up, we will be reaching out to each provider as part of the API profiling process. Even when API providers are involved, it doesn’t always mean you get a better quality API definition. In our experience, API providers often don’t care about the detail as much as some consumers and API service providers will. In the end, we think this is all going to be a community effort, relying on many actors to be involved along the way.

Self-Contained Conversations About Each API

With each entity being profiled living in its own GitHub repository, it opens up the opportunity to keep the API conversation localized to each entity’s GitHub repository. We are working to keep any work in progress, and conversations around each API’s definitions, within the GitHub issues for the repository. There will be ad-hoc conversations and issues submitted, but we will also be adding path specific threads that are meant to be an ongoing thread dedicated to profiling each path. We will be incorporating these threads into tooling and other services we are working with, encouraging a centralized, but potentially also distributed, conversation around each unique API path and its evolution. This keeps everything discoverable as part of an API’s definition, and publicly available so anyone can join in.

Making Profiling A Community Effort

We are repeating this process over and over for each entity we are profiling. Each entity lives in its own GitHub repository, with the definition and conversation localized. Ideally API providers are joining in on the work, as well as API service providers and motivated API consumers. We have a lot of energy for profiling APIs, and can cover some serious ground when we are in the right mode, but ultimately there is more work here than any single person or team could ever accomplish. We are looking to standardize the process, distribute the crowdsourcing of this across many different GitHub repositories, and involve many different API service providers. We are already working with some of them to ensure the most meaningful APIs are being profiled and maintained. This highlights another important aspect of all of this: the challenge of keeping API definitions evolving and soliciting many contributions, while keeping them certified, rated, and available for production usage.

Ranking APIs Using Their Definitions

Next, we are ranking APIs based upon a number of criteria. It is a ranking system developed as part of API Evangelist operations over the last five years, and is something we are looking to continue to refine as part of the Streamdata.io API Gallery profiling effort. Using the API definitions, we are looking to rate each API based upon a number of elements that exist within three main buckets:

– API Provider – Elements within the control of the API provider like the design of their API, sharing a road map, providing a status dashboard, being active on Twitter and GitHub, and other signals you see from healthy API providers.

– API Community – Elements within the control of the API community, and not always controlled by the API provider themselves. Things like forum activity, Twitter responses, retweets, and sentiment, and number of stars, forks, and overall GitHub activity.

– API Analyst – The last area is getting the involvement of API analysts and pundits, having them provide feedback on APIs and get involved in the process, and tracking which APIs are being talked about by analysts and storytellers in the space.

Most of this ranking can be automated when there is a complete APIs.json and OpenAPI definition, with machine readable sources of information present in those indexes. These are signals we can tap into using blog RSS, the Twitter API, the GitHub API, the Stack Exchange API, and others. However, some of it will be manual, and will require the involvement of API service providers, helping us add to our own ranking information, but also bringing their own ranking systems and algorithms to the table. In the end, we are hoping the activity around each API’s GitHub repository also becomes its own signal, one that consumers in the community can tune into, weight, or exclude based upon the signals that matter most to them.
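The idea of consumers weighting or excluding signals can be sketched as a simple weighted average. This is only an illustration of the concept: the signal names, the 0 to 1 normalization, and the function itself are assumptions for this example, not the actual ranking algorithm.

```python
def rank_score(signals, weights=None, exclude=()):
    """Weighted average of normalized signals (each expected to be in 0..1).

    Signals without an explicit weight default to 1.0, and excluded
    signals are ignored entirely.
    """
    weights = weights or {}
    total = 0.0
    weight_sum = 0.0
    for name, value in signals.items():
        if name in exclude:
            continue
        weight = weights.get(name, 1.0)
        total += weight * value
        weight_sum += weight
    return total / weight_sum if weight_sum else 0.0


# Hypothetical, already normalized signals for a single API.
signals = {"github_stars": 0.8, "twitter_activity": 0.4, "forum_activity": 0.6}

# A consumer who cares twice as much about GitHub activity.
print(rank_score(signals, weights={"github_stars": 2.0}))

# The same consumer, ignoring Twitter altogether.
print(rank_score(signals, weights={"github_stars": 2.0}, exclude={"twitter_activity"}))
```

Keeping the raw signals separate from the weights is what lets each consumer, or each API service provider, bring their own view of what matters.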

Streamdata.io StreamRank

Building on the API Evangelist ranking system, we are looking to determine the activity surrounding an API, and how much opportunity exists to turn an API into a real time stream, as well as identify the meaningful events that are occurring. Using the OpenAPI definitions generated for each API being profiled, we begin profiling for StreamRank by defining the following:

– Download Size – What is the API response download size when we poll an API?

– Download Time – What is the API response download time when we poll an API?

– Did It Change – Did the response change from the previous response when we poll an API?

We are polling an API for at least 24-48 hours, and up to a week or two if possible. We are looking to understand how large API responses are, and how often they change–taking notice of daily or weekly trends in activity along the way. The result is a set of scores that help us identify:

– Bandwidth Savings – How much bandwidth can be saved?

– Processor Savings – How much processor time can be saved?

– Real Time Savings – How real time is an API resource, and how much does that amplify the savings?

All of this goes into what we are calling a StreamRank, which ultimately helps us understand the real time opportunity around an API. When you have data on how often an API changes, and you combine this with the OpenAPI definition, you have a pretty good map for how valuable a set of resources is, based upon the ranking of the overall API provider, but also the value that lies within each endpoint’s definition. This provides us with another pretty compelling dimension to the overall ranking for each of the APIs we are profiling.
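The three polling measurements (download size, download time, and whether the response changed) can be sketched in Python like this. It is a simplified illustration, not the actual StreamRank calculation: the `fetch` callable, the function names, and the way bandwidth savings is estimated (counting only the bytes of unchanged responses as avoidable) are all assumptions for this example.

```python
import hashlib
import time


def poll(fetch, count, interval=0.0):
    """Call fetch() `count` times and record size, time, and change per poll."""
    samples = []
    previous_digest = None
    for _ in range(count):
        started = time.perf_counter()
        body = fetch()  # must return the response body as bytes
        elapsed = time.perf_counter() - started
        digest = hashlib.sha256(body).hexdigest()
        samples.append({
            "size": len(body),
            "seconds": elapsed,
            # The first poll has nothing to compare against, so it counts as a change.
            "changed": digest != previous_digest,
        })
        previous_digest = digest
        if interval:
            time.sleep(interval)
    return samples


def summarize(samples):
    """Reduce poll samples to a change rate and a rough bandwidth estimate."""
    if not samples:
        return {"polls": 0, "change_rate": 0.0, "bandwidth_saved": 0.0}
    total_bytes = sum(s["size"] for s in samples)
    changed_bytes = sum(s["size"] for s in samples if s["changed"])
    return {
        "polls": len(samples),
        "change_rate": sum(s["changed"] for s in samples) / len(samples),
        # Share of downloaded bytes that belonged to unchanged responses.
        "bandwidth_saved": 1 - changed_bytes / total_bytes if total_bytes else 0.0,
    }


# Canned responses stand in for a real HTTP call so the sketch runs offline.
responses = iter([b'{"price": 10}', b'{"price": 10}', b'{"price": 11}', b'{"price": 11}'])
samples = poll(lambda: next(responses), count=4)
print(summarize(samples))
```

In a real run, `fetch` would wrap an HTTP request to the API path being profiled, and `interval` would be set to the polling frequency used over the 24-48 hour window.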

APIMetrics CASC Score

Next, we have partnered with APIMetrics to bring in their CASC scoring when it makes sense. They have been monitoring many of the top API providers, and applying their CASC score to each API’s overall availability. Eventually, we’d like to have every public API actively monitored from many different regions, and possess active, as well as historical, data that can be used to help rank each API provider. Like the other work here, this type of monitoring isn’t easy or cheap, so it will take some prioritization and investment to properly bring in APIMetrics, as well as other API service providers, to help us rank all the APIs available in the gallery.

Event-Driven Opportunity With AsyncAPI

Something we have also just begun doing is adding a fourth definition to the stack when it makes sense. If any of these providers possesses a real time, event-driven, or message oriented API, we want to make sure it is profiled using the AsyncAPI specification. The specification is just getting going, but we are eager to help push forward the conversation as part of our work on the Streamdata.io API Gallery. The format is a great sister specification for OpenAPI, and something we feel is relevant for any API that we profile with a high enough StreamRank. Once an API possesses a certain level of activity, and has a certain level of richness regarding parameters and the request surface area, the makings of an event-driven API are there, and the provider just hasn’t realized it. The map for the event-driven API exists within the OpenAPI parameter enums and the tagging for each API path. All you need is the required webhook or streaming layer to realize an existing API’s event-driven potential, and this is where Streamdata.io services come into the picture.
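One way to read that map out of an OpenAPI 2.0 definition is to walk the GET operations and combine each tag with each parameter enum value. The Python sketch below does exactly that. The dotted topic naming and the function name are assumptions made for illustration, and the output is a list of candidate topics, not an AsyncAPI document.

```python
def event_candidates(openapi):
    """List candidate event topics derived from tags and parameter enums."""
    candidates = []
    for path, item in openapi.get("paths", {}).items():
        for method, operation in item.items():
            if method != "get":
                continue  # only readable resources are polling targets here
            tags = operation.get("tags") or ["untagged"]
            for param in operation.get("parameters", []):
                for value in param.get("enum", []):
                    for tag in tags:
                        candidates.append(f"{tag}.{param['name']}.{value}")
    return candidates


# A hypothetical path with one tagged operation and one enum parameter.
definition = {
    "paths": {
        "/orders": {
            "get": {
                "tags": ["orders"],
                "parameters": [
                    {"name": "status", "in": "query", "type": "string",
                     "enum": ["open", "shipped"]},
                    {"name": "limit", "in": "query", "type": "integer"},
                ],
                "responses": {"200": {"description": "A list of orders"}},
            }
        }
    }
}

print(event_candidates(definition))
```

Each candidate is only a starting point for a conversation about which changes are meaningful enough to become events.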

One Gallery, Many Discovery Opportunities

The goal with all of this work is to turn the Streamdata.io API Gallery into an active community, where anyone can contribute, as well as fork and integrate API definitions and topical streams into their existing pipelines. All of this will take time, and a considerable amount of investment, but it is something we’ve been working on for a while, and will continue to prioritize in 2018 and beyond. We are seeing the API discovery conversation reach a critical mass thanks to the growth in the number of APIs, as well as the adoption of OpenAPI and Postman in the industry. This makes API discovery a more urgent problem, as the number of available APIs increases, along with the number of APIs we depend on each day to operate across our companies, organizations, institutions, and government agencies. Our customers need a machine readable way to discover and integrate valuable internal, partner, and public resources into their existing data and software development pipelines, and the Streamdata.io API Gallery is looking to be this solution.