An API serves live data: new content is added and existing content is updated as calls are made. However, unless you poll the API regularly, you might not see how live that data can be. How can you visualize what has changed, to give the user a real-time view of what’s going on? And how can you do it efficiently, without wasting precious bandwidth?

To answer those questions, the Restlet and Streamdata.io teams decided to build a fun demo showing how you can stream API changes live, in the browser. And speaking of “questions” and “answers,” what about a little live quiz application where respondents can vote in real time? Since we’re API fanatics, we decided to make a quiz about HTTP Status Codes!

Not everybody enjoys HTTP status code quizzes, so we also wanted non-developers to be able to enter their own quiz via Google Sheets.

For the impatient, you can already head over to the HTTP Status Code Quiz, before diving into the nitty-gritty details!

In this article, we’re going to show you some cool features of both Restlet’s APISpark API platform and Streamdata.io’s powerful API streaming capabilities.

Let’s get started!

Modeling your data within APISpark & Google Sheets

First of all, we’ll need to design a little data model for our application, which will result in a Web API. There are three different concepts in our quiz: the questions and their possible answers, the right answers, and the votes for each question and answer.

Instead of storing everything in APISpark’s own built-in entity store, we’re going to split things up: the votes go in the entity store, while the questions and answers go in a Google Sheets wrapper. Why? There are two reasons. First, a Google Sheets spreadsheet is far more user-friendly for entering questions and answers, since you get the familiar UI of a spreadsheet with its tables, columns and rows. Second, Google imposes rate limits on its spreadsheet API, so we can’t let hundreds of voters hammer Google Sheets without Google thinking we’re running some kind of bot attack!

We’ve gone with a pretty simple model.

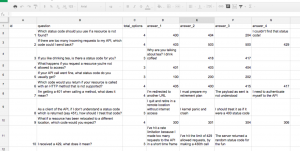

Our first sheet contains rows of questions, each with an ID, the question itself, the number of possible answers, and the possible answers. I’m not going to show you the second sheet: it contains the right answers, and I don’t want you to cheat! Basically, it contains the ID of each question and the ID of its right answer.

We now have a spreadsheet UI ready for entering our questions and answers, one that requires no particular technical skills from whoever is responsible for entering the data.
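As a rough sketch of how the app could consume these sheets, here is one way to turn a row of the questions sheet into a question object. It assumes the wrapper returns each row as an object of string values with hypothetical column names (id, question, nbAnswers, answer1 to answer4), and that the hidden answers sheet is loaded as a map from question ID to right-answer ID:

```javascript
// Hypothetical row from the "questions" sheet; column names are assumptions.
const sampleRow = {
  id: "1",
  question: "Which status code means 'Not Found'?",
  nbAnswers: "3",
  answer1: "200",
  answer2: "404",
  answer3: "500",
  answer4: "",
};

// Turn a flat spreadsheet row into a question object, keeping only the
// first `nbAnswers` answer columns (the sheet has room for up to 4).
function rowToQuestion(row) {
  const count = Number(row.nbAnswers);
  const answers = [];
  for (let i = 1; i <= count; i++) {
    answers.push({ id: i, label: row["answer" + i] });
  }
  return { id: Number(row.id), text: row.question, answers };
}

// The hidden "answers" sheet, loaded as { questionId: rightAnswerId }.
function isCorrect(answerKey, questionId, answerId) {
  return answerKey[questionId] === answerId;
}
```

Keeping `nbAnswers` as an explicit column lets authors leave unused answer cells empty without the app showing blank choices.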

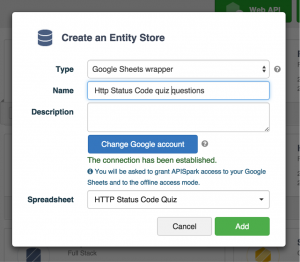

Next, we are going to import this spreadsheet into an APISpark Google Sheets wrapper.

Once you’ve created an account on APISpark (just click “Sign in” and select a social login provider, or create a dedicated account), you’re greeted by our quick-start wizards, which guide you through the creation of your first store and API. If you already have an account, you can create a new “Entity Store” from the dashboard, where you’ll be able to select a Google Sheets wrapper:

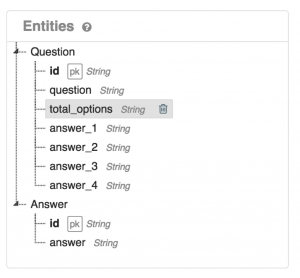

Once you’ve selected your Google account and your spreadsheet, you’ll be redirected to the newly created datastore. In the overview, the left pane entitled “Entities” shows the structure of your data model.

Since it comes from a spreadsheet, the data is naturally tabular. A full-stack entity store would allow much richer models, thanks to lists and composite values, but here we favored the spreadsheet UI to make authoring the quiz easier for non-technical people.

Our Google Sheets wrapper is almost ready; we just need to deploy it (with the “Deploy” button in the top right-hand corner) to make the data live!

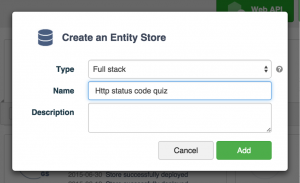

After taking care of the questions and answers, let’s create a “full-stack” entity store to contain the voting data:

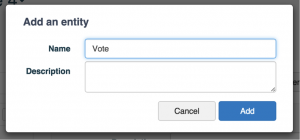

Once in the overview of the entity store you’ve created, you’ll be able to create a new “Vote” entity to store the results of users’ voting:

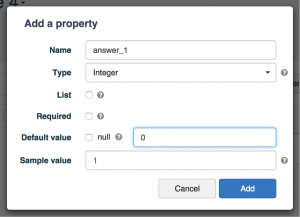

By clicking the little plus sign on the Vote entity in the Entities pane, you can add properties to that entity. We’ll create four properties for the (up to) four possible answers to each question.
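To make the mapping concrete, here is a sketch of a Vote record with one counter per possible answer, plus helpers to record a vote and compute a total. The property names (questionId, answer1 to answer4) and the one-record-per-question layout are assumptions for illustration; the article only states that there are four answer properties:

```javascript
// Hypothetical Vote record: one per question, one counter per answer.
function emptyVote(questionId) {
  return { questionId, answer1: 0, answer2: 0, answer3: 0, answer4: 0 };
}

// Return a new Vote record with the chosen answer's counter incremented.
// answerId is 1-based, matching the answer1..answer4 property names.
function recordVote(vote, answerId) {
  if (!Number.isInteger(answerId) || answerId < 1 || answerId > 4) {
    throw new RangeError("answerId must be an integer between 1 and 4");
  }
  const key = "answer" + answerId;
  return { ...vote, [key]: vote[key] + 1 };
}

// Total votes cast for a question, summed over the four counters.
function totalVotes(vote) {
  return vote.answer1 + vote.answer2 + vote.answer3 + vote.answer4;
}
```

For example, calling `recordVote` twice with answer 2 on `emptyVote(1)` gives a record whose `answer2` counter and total are both 2.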

We’ll deploy this entity store too, by clicking the “Deploy” button in the top right hand corner.

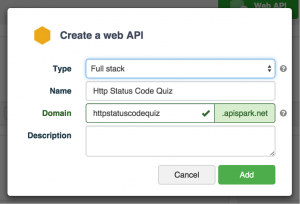

Now let’s import both data stores into a single Web API! First, we need to create that Web API.

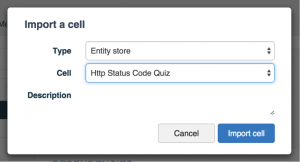

Once it’s created, go to the Settings tab and click “Add” in the “Imports” panel on the left to import your two data stores: the Google Sheets wrapper with our questions and answers, and the Entity Store that collects the votes:

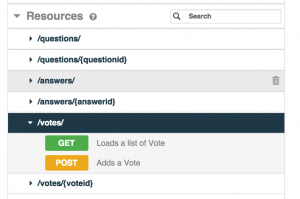

Once both data stores are imported, go back to the “Overview” pane of your API to see the available resources.

One last click on the “Deploy” button makes your API live! Now it’s Streamdata.io’s turn to bring this API to life, showing votes as they evolve in real time!

Turning an APISpark API into a live data stream

Once we had defined our quiz API with APISpark, we wanted to build a simple web application that lets people take the great Status Code Quiz. Basically, we wanted a (responsive) web application that:

- lets the user answer the quiz

- displays the results of the quiz

At the same time, to give the demo a real-time flavor, we wanted to display:

- for each question: a graph, updated in (near) real time, showing the vote totals

- for the results page: graphs showing the total votes for each answer, updated in (near) real time

Just imagine bar charts for each question and for the results page, with the bars growing in real time as people answer the quiz.

To make a simple quiz app that rocks in real time, we chose a few technologies:

- a JavaScript graph library: we chose D3.js, which is quite famous. We had also seen a nice demo based on this library at Best of Web 2015, given by Raphaël Luta (@raphaelluta), which used tweets to update graphs in real time. Really nice. Hopefully, the video will be published soon…

- a nice JavaScript framework: we used AngularJS, which lets us build single-page applications (SPAs) with a simple MVC/MVVM architecture. We had already written a few demos with AngularJS, and its property binding is a nice feature that fits well with real-time updates of JavaScript properties. AngularJS is not the only framework with this feature, of course; many others, such as Ember.js, have it too. (We used AngularJS 1.x, but porting the demo to the next version, currently in beta, would be a nice way to learn it…)

- for the responsive part, we were curious to try some of the Material Design elements introduced by Google, so we decided to use Angular Material, an AngularJS implementation of Google’s Material Design specification.

- last but not least, a nice service that streams APIs: streamdata.io! streamdata.io is a proxy that polls any JSON API, pushes the original dataset, and then pushes only the changes to that dataset, using the JSON Patch format (RFC 6902). Because streamdata.io pushes data only when something has actually changed, instead of clients polling frequently, it relieves the load on the JSON API server (see How it works). streamdata.io also has a cache for the changes, which is a nice feature.
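To illustrate why pushing patches saves bandwidth, here is a simplified sketch of the kind of diff a proxy could compute between two successive polls of the same API. It is not streamdata.io’s actual algorithm, which the article doesn’t describe: arrays whose length changes, and values whose type changes, are simply replaced wholesale rather than diffed minimally:

```javascript
// Escape a key for use in a JSON Pointer (RFC 6901): "~" -> "~0", "/" -> "~1".
function escapeToken(key) {
  return String(key).replace(/~/g, "~0").replace(/\//g, "~1");
}

const isObject = v => v !== null && typeof v === "object";
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Compute an RFC 6902 patch turning `before` into `after`.
function diff(before, after, path = "", ops = []) {
  const sameShape =
    isObject(before) && isObject(after) &&
    Array.isArray(before) === Array.isArray(after) &&
    (!Array.isArray(before) || before.length === after.length);

  if (sameShape) {
    // Recurse into keys present before; emit "remove" for vanished keys.
    for (const key of Object.keys(before)) {
      const p = path + "/" + escapeToken(key);
      if (!has(after, key)) ops.push({ op: "remove", path: p });
      else diff(before[key], after[key], p, ops);
    }
    // Emit "add" for keys that only exist in the new snapshot.
    for (const key of Object.keys(after)) {
      if (!has(before, key)) {
        ops.push({ op: "add", path: path + "/" + escapeToken(key), value: after[key] });
      }
    }
  } else if (JSON.stringify(before) !== JSON.stringify(after)) {
    // Different primitives, types or array lengths: replace the whole value.
    ops.push({ op: "replace", path, value: after });
  }
  return ops;
}
```

For example, if only one vote counter changes between two polls, `diff([{ id: 1, answer1: 3 }], [{ id: 1, answer1: 4 }])` yields a single `replace` operation on `/0/answer1`, however large the rest of the dataset is.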

The source code of the app is available on GitHub.

We won’t describe the entire code, only the parts we found important when implementing such an application with AngularJS, APISpark and streamdata.io, along with some “best practices” we usually follow.

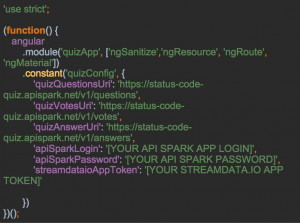

First of all, we define an AngularJS “constant” object holding the app’s configuration data. For this demo, this configuration object defines the different URIs of the quiz API, the APISpark tokens and the streamdata.io token. This centralizes the settings and eases refactoring (for instance, the tokens are declared in only one place).
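Here is a minimal sketch of such a configuration object, in plain JavaScript so it can run anywhere. The URLs, resource paths, credential placeholders and the `quizApp` module name are all hypothetical, and the login/password pair reflects our assumption that the quiz API uses HTTP Basic credentials:

```javascript
// Hypothetical app configuration; every URL and credential is a placeholder.
const QUIZ_CONFIG = Object.freeze({
  apiBaseUrl: "https://quiz.example.com/v1",
  resources: Object.freeze({ questions: "/questions/", votes: "/votes/" }),
  apisparkLogin: "<APISPARK_LOGIN>",
  apisparkPassword: "<APISPARK_PASSWORD>",
  streamdataToken: "<STREAMDATA_TOKEN>",
});

// Build the full URL of a quiz resource from the central configuration.
function resourceUrl(config, name) {
  const path = config.resources[name];
  if (path === undefined) throw new Error("Unknown resource: " + name);
  return config.apiBaseUrl + path;
}

// In AngularJS 1.x, the object could be registered as a constant, e.g.:
//   angular.module("quizApp").constant("QUIZ_CONFIG", QUIZ_CONFIG);
```

Freezing the object guards against a controller accidentally overwriting a shared setting at runtime.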

To obtain your own streamdata.io token, create an account here; your token is displayed under MyApp/Settings/Security. Don’t worry, it’s free for up to one million messages per month! Once your account is created, you can stream any JSON RESTful API and test it with a simple curl:

```shell
curl -v "http://streamdata.motwin.net/http://mysite.com/myJsonRestService?X-SD-Token=[YOURTOKEN]"
```
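The curl call above simply prefixes the target URL with the streamdata.io proxy host and passes the token as a query parameter. A small helper can build such URLs; how the proxy treats a target URL that already has its own query string is our assumption here (we append the token with “&”):

```javascript
// Build a streamdata.io proxy URL: proxy host + target URL + token parameter,
// following the pattern of the curl example. The "&" branch for targets that
// already carry a query string is an assumption.
function streamdataUrl(targetUrl, token, proxy = "http://streamdata.motwin.net/") {
  const separator = targetUrl.includes("?") ? "&" : "?";
  return proxy + targetUrl + separator + "X-SD-Token=" + encodeURIComponent(token);
}
```

Encoding the token with `encodeURIComponent` keeps the URL valid even if a token ever contains reserved characters.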

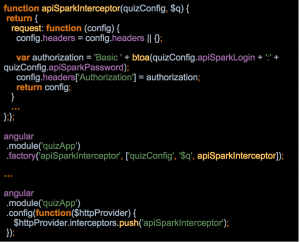

To get the list of questions and answers, we call the quiz API served by APISpark directly. Every call to the REST API needs to pass your APISpark credentials. In keeping with the DRY principle, we implemented an AngularJS interceptor on the $http service, which $resource uses to perform its REST calls. Basically, the interceptor adds the Authorization header required by the quiz API, built from our APISpark credentials, to every REST call made through $resource. This way, we don’t have to remember to build and pass the APISpark header every time we use $resource.
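Here is a sketch of the interceptor logic, written as a plain factory so it can be tested outside AngularJS. An AngularJS interceptor is an object whose `request` method receives each request config and must return it; the Basic scheme and the restriction to URLs under the quiz API’s base URL are our assumptions, and the names are hypothetical:

```javascript
// Build an AngularJS-style $http interceptor that adds the APISpark
// Authorization header (assumed to be HTTP Basic) to quiz API calls.
function createApisparkInterceptor(login, password, apiBaseUrl) {
  const authorization = "Basic " + btoa(login + ":" + password);
  return {
    request(config) {
      // Only decorate calls to the quiz API, not to third-party URLs.
      if (config.url && config.url.startsWith(apiBaseUrl)) {
        config.headers = config.headers || {};
        config.headers.Authorization = authorization;
      }
      return config;
    },
  };
}

// Registration in AngularJS 1.x could look roughly like:
//   app.factory("apisparkInterceptor", () => createApisparkInterceptor(...));
//   app.config(["$httpProvider", p => p.interceptors.push("apisparkInterceptor")]);
```

Restricting the header to the quiz API’s base URL avoids leaking credentials if the same app ever calls another service through $http.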

To display vote graphs that change in real time, we stream the quiz Vote API through streamdata.io, using the streamdata.io JavaScript SDK. The SDK has a fairly simple API that lets us react to different events, the main ones being the reception of the original dataset and then of each subsequent change to it, in JSON Patch format.

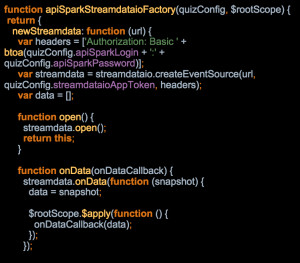

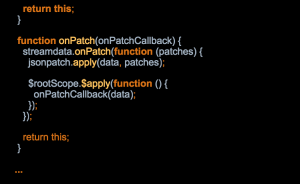

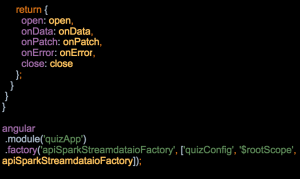
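For readers unfamiliar with JSON Patch, here is a minimal sketch of applying a patch to the dataset, supporting only the add, remove and replace operations of RFC 6902 (move, copy and test are left out). In the app this job is handled by a JSON Patch library; the sketch only illustrates what such a library does:

```javascript
// Deep-copy JSON data so patching never mutates the caller's document.
const clone = value => JSON.parse(JSON.stringify(value));

// Decode a JSON Pointer (RFC 6901) into its reference tokens.
function parsePointer(path) {
  if (path === "") return [];
  return path.slice(1).split("/").map(t => t.replace(/~1/g, "/").replace(/~0/g, "~"));
}

// Apply an RFC 6902 patch, supporting only "add", "remove" and "replace".
function applyPatch(doc, patch) {
  let result = clone(doc);
  for (const { op, path, value } of patch) {
    if (!["add", "remove", "replace"].includes(op)) {
      throw new Error("Unsupported op: " + op);
    }
    const tokens = parsePointer(path);
    if (tokens.length === 0) {
      // An empty path targets the whole document.
      if (op === "remove") throw new Error("Cannot remove the document root");
      result = clone(value);
      continue;
    }
    const last = tokens.pop();
    let parent = result;
    for (const t of tokens) parent = parent[t]; // walk down to the parent
    if (Array.isArray(parent)) {
      const index = last === "-" ? parent.length : Number(last);
      if (op === "add") parent.splice(index, 0, clone(value));
      else if (op === "remove") parent.splice(index, 1);
      else parent[index] = clone(value);
    } else if (op === "remove") {
      delete parent[last];
    } else {
      parent[last] = clone(value);
    }
  }
  return result;
}
```

For example, `applyPatch(votes, [{ op: "replace", path: "/0/answer1", value: 4 }])` returns a new list whose first vote record has `answer1` set to 4, leaving `votes` itself unchanged.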

We could have called the SDK directly from the various controllers of our application. However, since we call the quiz Vote API, we also need to pass the quiz API’s APISpark credentials in the headers. So, to hide this technical detail from our controllers, in the same spirit as the interceptor, we defined a factory that wraps the streamdata.io SDK. This factory returns a new wrapper around the SDK API with the APISpark headers already configured.

Note that the factory also encapsulates calls to a JSON Patch library that applies the patch operations to the dataset (in our case, the list of votes). As a result, the controller callback in charge of vote updates only ever deals with the votes structure: from the controllers’ point of view, each update arrives as the same data structure (the list of votes) as the initial dataset, which keeps their code simpler.

Once again, such a factory isn’t mandatory, but we feel it keeps the controllers free of header, token and JSON Patch boilerplate…
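The core of that wrapper idea can be sketched independently of the SDK: keep the current dataset, apply each incoming patch to it, and hand controllers the full, updated dataset every time. Here, `source` is assumed to be any object exposing `onData(cb)` and `onPatch(cb)` registration methods, standing in for the streamdata.io event source, and `applyPatch(doc, patch)` stands in for the JSON Patch library; we don’t claim these match the SDK’s exact API, and header configuration is left out because it depends on that API:

```javascript
// Wrap an event source so listeners always receive the full dataset.
function createDatasetStream(source, applyPatch) {
  let dataset = null;
  const dataListeners = [];
  const updateListeners = [];

  // Initial dataset: store it and notify "data" listeners.
  source.onData(data => {
    dataset = data;
    dataListeners.forEach(cb => cb(dataset));
  });

  // Each patch: apply it to the stored dataset, then notify "update"
  // listeners with the complete, patched dataset (never the raw patch).
  source.onPatch(patch => {
    dataset = applyPatch(dataset, patch);
    updateListeners.forEach(cb => cb(dataset));
  });

  const wrapper = {
    onData(cb) { dataListeners.push(cb); return wrapper; },
    onUpdate(cb) { updateListeners.push(cb); return wrapper; },
    current() { return dataset; },
  };
  return wrapper;
}
```

Because both callbacks receive the same shape of data, a controller can reuse one conversion function for the initial render and for every update.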

Now it’s fairly simple to turn our Vote API into a streaming API and add callbacks for the initial dataset and for the subsequent updates. In the initialization of our controllers, we only have to declare those two callbacks.

createChart and updateChart are simple callbacks that both receive the same data structure: the list of votes from the APISpark Vote API. Their job is to convert it into the structure our charts expect, which is not much work. Thanks to AngularJS property binding, we then bind that structure to an AngularJS directive that wraps the D3.js charts, so the charts update in a straightforward way.
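As an illustration of that conversion, here is a hypothetical function mapping Vote records (assumed to have a questionId and counters answer1 to answer4) to the flat list of `{ label, value }` items a bar chart typically consumes. Both createChart and updateChart could delegate to it and assign the result to the scope property bound to the chart directive:

```javascript
// Convert the Vote list into chart data for one question.
// `labels` holds the answer texts in order (answer1, answer2, ...).
function votesToChartData(votes, questionId, labels) {
  const vote = votes.find(v => v.questionId === questionId);
  return labels.map((label, i) => ({
    label,
    // Missing record or counter: show an empty bar rather than failing.
    value: vote ? (vote["answer" + (i + 1)] || 0) : 0,
  }));
}
```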

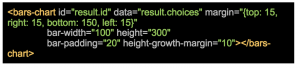

The last point is integrating the charts, which we did with an AngularJS directive. A directive lets you declare custom HTML elements in your markup and attach behavior to them in AngularJS code, which makes it a good way to build reusable HTML components for different parts of the application. So we wrapped the D3.js code that draws the bar charts in such a directive. That way, declaring a bar chart in HTML is simple:

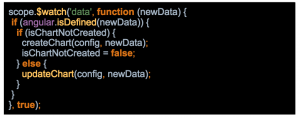
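Inside such a directive, bar lengths usually come from a linear scale mapping the domain [0, highest count] onto the range [0, chart width] in pixels; in D3 that would be `d3.scale.linear()` (D3 v3) or `d3.scaleLinear()` (v4 and later). The hand-written sketch below shows the same arithmetic without depending on D3; the function names and layout parameters are hypothetical:

```javascript
// Linear scale from [0, domainMax] to [0, rangeMax].
function linearScale(domainMax, rangeMax) {
  // Guard against an all-zero dataset, which would otherwise divide by zero.
  const k = domainMax > 0 ? rangeMax / domainMax : 0;
  return value => value * k;
}

// Compute horizontal bar rectangles for a list of { label, value } items.
function barLayout(data, width, barHeight, gap) {
  const max = Math.max(0, ...data.map(d => d.value));
  const x = linearScale(max, width);
  return data.map((d, i) => ({
    label: d.label,
    x: 0,
    y: i * (barHeight + gap),
    width: x(d.value),
    height: barHeight,
  }));
}
```

Because the scale is recomputed from the current maximum, the longest bar always fills the chart width, and the other bars shrink relative to it as votes come in.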

Property binding is performed with the AngularJS $watch function, which calls a listener whenever the watched data changes.
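In a directive, this typically looks like `scope.$watch("data", redraw, true)`, where the third argument (`objectEquality`) asks AngularJS to compare values deeply with `angular.equals`, so in-place changes to the vote list are detected (the `"data"` attribute and `redraw` function are hypothetical). The toy model below only illustrates that dirty-checking idea; it is not AngularJS’s implementation, runs a single pass per digest, and compares JSON serializations instead of using `angular.equals`:

```javascript
// Toy dirty-checking scope: each digest compares a serialized snapshot of
// every watched value with the previous one and calls the listener if it
// changed (and once on the first digest).
function createScope() {
  const watchers = [];
  const parse = s => (s === undefined ? undefined : JSON.parse(s));
  return {
    watch(getter, listener) {
      watchers.push({ getter, listener, last: undefined, initialized: false });
    },
    digest() {
      for (const w of watchers) {
        const current = JSON.stringify(w.getter());
        if (!w.initialized || current !== w.last) {
          const oldValue = w.initialized ? parse(w.last) : undefined;
          w.last = current;
          w.initialized = true;
          w.listener(parse(current), oldValue);
        }
      }
    },
  };
}
```

Comparing snapshots rather than references is what lets a deep watch notice a counter changing inside an array that is otherwise the same object.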

To be honest, the hardest part was coding the bar charts with D3.js. It’s a powerful graph library, but the learning curve is quite steep… And while there are lots of examples of static charts, there are far fewer with dynamic behavior. At first, we wanted each bar’s growth to be highlighted in green before fading into the bar’s color. Nice animation, but not so nice to code… We ran into some issues and finally settled for bars that grow in a single color, which was quite simple thanks to AngularJS property binding. But I haven’t given up on implementing the original idea once I get a bit more experience with D3.js…

As a final word about the demo, the source code is available on GitHub here. The README describes how to run it once you have set up your APISpark quiz API and a streamdata.io app. Feel free to fork the code at will!

Conclusion

The ability to aggregate several data sources and expose them through one transparent API is a useful and powerful feature of APISpark. Giving a real-time flavor to an APISpark Web API was easy thanks to streamdata.io and took only a few seconds. As is often the case, building the UI was the longest part! It should go faster for your own project if you already master your graphics library, or if you’re only updating the DOM in the browser.

So, liberate your APIs: stream them with the winning combo of APISpark and streamdata.io!

This article was cross-posted on the Restlet blog