This guest post was authored by Glenn Gruber. Glenn is a Sr. Mobile Strategist at Propelics, an Anexinet company. He leads enterprise mobile strategy engagements to help companies determine the best way to integrate mobile into their business, both from a consumer-facing perspective and as a way to empower employees to be more productive and improve service delivery through the intelligent use of mobile devices and contextual intelligence. Glenn has advised a wide range of enterprises on how to leverage mobile within their business, including Bank of Montreal, Dubai Airports, Carnival Cruise Line and Merck. He is a leading voice in the travel sector as a contributing Node to Tnooz, where he writes about how mobile and other emerging technologies are impacting the travel sector, and he is a frequent speaker at industry events.

We’ve been building apps for the enterprise for a while now. We still have a long way to go in terms of filling out our portfolios and providing apps across different roles, but progress has been made. We know how to build, deploy and distribute apps to our employees and partners. And even more encouraging, from what I’ve seen firsthand, is that we have begun to take on more and more complex business processes and tasks, unlocking greater productivity throughout the enterprise.

But there’s one element of mobile apps that is a bit perplexing.

Inarguably, the camera is the most used feature of the phone. Millions if not billions of pictures are taken and shared every day. The quality of the camera (and advances in computational photography) has become a defining and differentiating feature of the device. But you wouldn’t know that by looking at the apps we build.

A16z’s Benedict Evans made an amazing point in one of his recent talks. He said:

“The smartphone’s image sensor, in particular, is becoming a universal input, and a universal sensor. Talking about ‘cameras’ taking ‘photos’ misses the point here: the sensor can capture something that looks like the prints you got with a 35mm camera, but what else? Using a smartphone camera just to take and send photos is like printing out emails – you’re using a new tool to fit into old forms. Meanwhile, the emergence of machine-learning-based image recognition means that the image sensor can act as input in a more fundamental way – translation is now an imaging use case, for example, and so is maths. Here it’s the phone that’s looking at the image, not the user. Lots more things will turn out to be ‘camera’ use cases that aren’t obvious today: computers have always been able to read text, but they could never read images before.”

Let that sink in for a minute (tapping foot, while I wait).

Many of the apps that we have built use the camera to take a picture of something (e.g. a safety hazard, a damaged shipment) but do nothing more than the digital equivalent of taking a Polaroid and paper-clipping it to a file.

However, through this new lens (see what I did there) we have all sorts of new ways we could and should leverage the camera in our apps. The camera as an input device, combined with Machine Learning and Augmented Reality, opens up a host of new possibilities. Think about that when you’re building your next app.

Let me share a few examples to get the juices flowing:

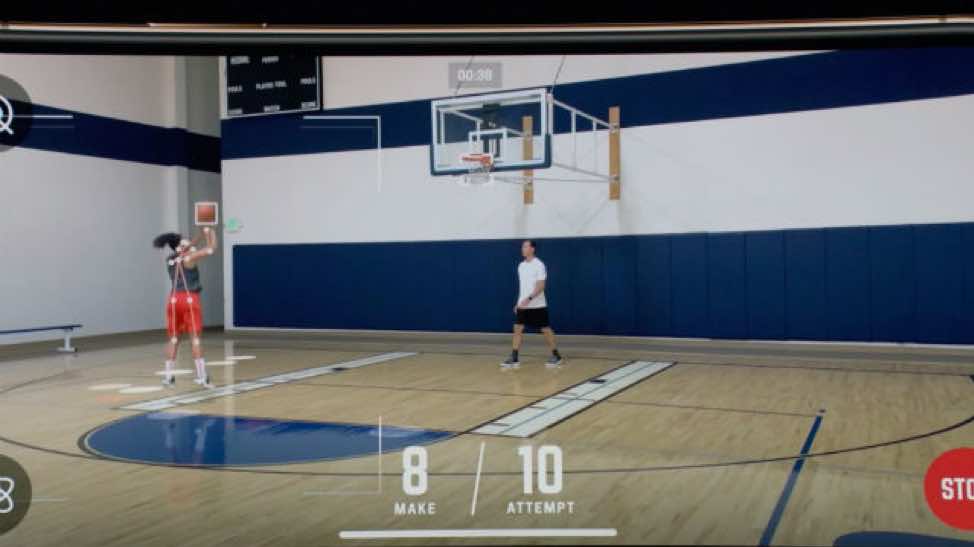

- Being a hoops fan, I thought that the HomeCourt demo at Apple’s September event was incredibly impressive…a real game changer (pun intended). Using both augmented reality and machine learning, the app uses the camera to analyze the shooter’s form and track the trajectory of the ball.

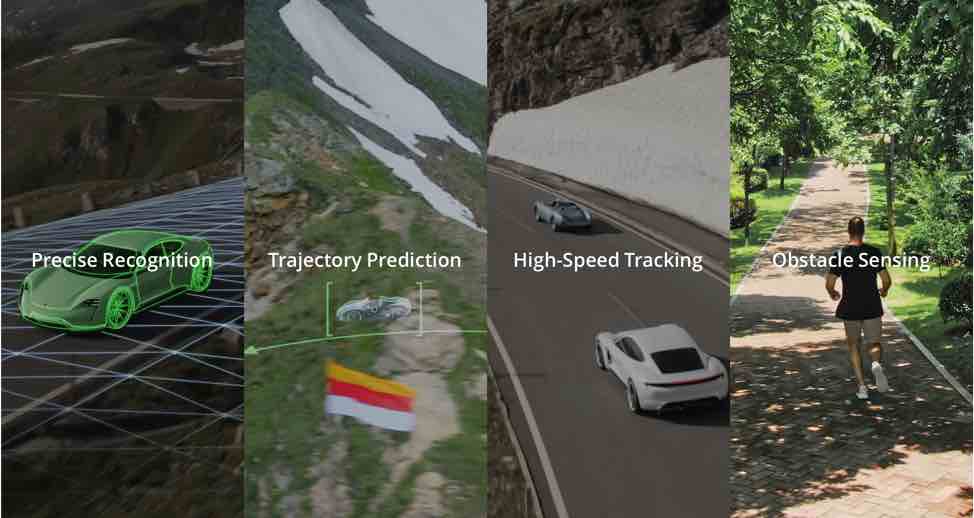

- Want another example of on-device machine learning? Let’s go out of the box and up, say, 100 feet for an example. My wife and I got a DJI Mavic Air Pro drone this year, mostly for use on her family travel blog, but there’s some really cool technology inside it powered by Machine Learning. With the ActiveTrack mode, you can select a target you want to follow – a person, a car, whatever – and it locks on to that object and follows it wherever it goes, predicting its trajectory if it loses line of sight and then reacquiring the target. And all the while it is using vision and sensors to avoid obstacles like trees and tension wires to keep up. Really pretty cool stuff. Now that might not be a smartphone application, but it hopefully makes you start to think differently about how you might use on-device ML in conjunction with the camera.

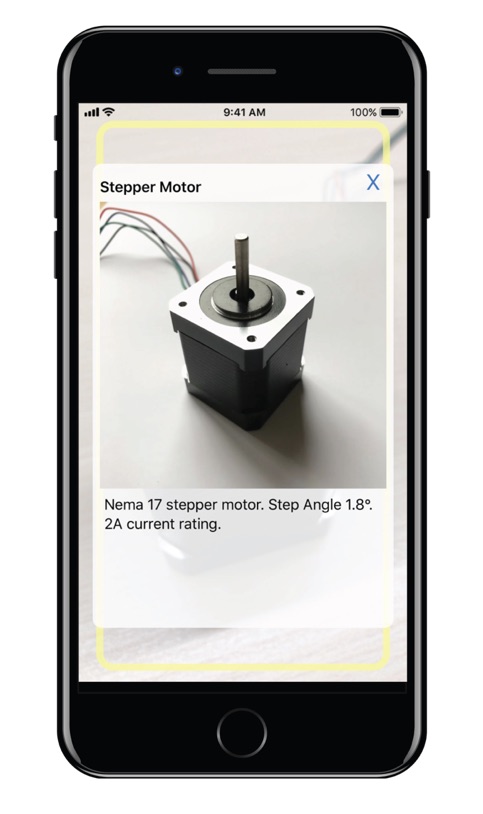

In one of Apple’s AR sessions, they talked about using machine learning/image recognition to train the device to identify discrete parts (NB: if anyone from Ikea is reading this, please, PLEASE, integrate this immediately so I know the difference between screw M and screw R).

But let’s not just use this technology to build furniture. We should leverage the AR capabilities in devices that our employees already have or that can serve more than a single purpose. Apple’s ARKit and Google’s ARCore have created an immense opportunity for us all to build impactful, deployable AR applications today. Both Apple and Google have abstracted away much of the hard work of building AR apps, so they can be built with the same tools mobile developers are already comfortable working with. There are literally hundreds of millions of smartphones and tablets out in the wild that can run AR today.

Think about how this can revolutionize field service, as just one example. Yes, there are certain applications that require hands-free use, and smart glasses might be more appropriate for those. But there are many more that don’t. Given the installed base that’s in the hands of our customers and employees, it borders on negligence not to look long and hard at the smartphone to incubate AR projects.

Truly, there are kajillions of use cases we can imagine.

- An insurance agent…or better yet…a consumer points their phone at the damaged fender of their car and the app immediately provides a claim estimate, initiates the claim and suggests local repair shops (with directions).

- I recently heard of an app being piloted at Keller Williams, the world’s largest real estate franchise by agent count, with more than 975 offices and 186,000 associates. The agent will now be able to walk through the house while the app “looks” at it and identifies key features and phrases to use in the listing to help highlight the unique qualities of the house, saving the agent a lot of time.

- A maintenance engineer goes to fix a piece of equipment. They point the camera at the equipment, and the app compares what it “sees” to the Digital Twin, helps identify the root cause of the malfunction and provides the technician with the steps to effect the repairs.

I’m sure there are many more potential ideas at your company. I encourage you to think about building apps that “see” and revisit some of your earlier apps and add this type of functionality.