Remember those old switchboard operators, manually connecting calls by plugging cables into the right sockets? Today’s enterprise integration faces similar challenges, but instead of phone lines, we’re connecting applications, data, and systems that all need to work together seamlessly.

The rise of digital business has given us powerful tools, but it’s also made our technology landscape more complex than ever.

Modern integration platforms like Axway’s Amplify Fusion aim to address these challenges.

The power of modern integration

Just as telephone systems evolved from manual switchboards to automated exchanges, modern integration platforms provide tools that streamline connecting different systems. Whether your applications run in the cloud, on-premises, or in a hybrid of both, integration platforms offer a unified approach to building and managing these connections.

The Designer module in Amplify Fusion represents this evolution through its visual, low-code approach.

Instead of writing complex integration code from scratch, teams can construct integration flows from pre-built components, much like working with standardized building blocks rather than crafting a custom solution for every connection.

This enables both technical and business teams to collaborate on integration projects, each bringing their expertise to the table.

Modern integration architectures come with both opportunities and challenges, particularly when it comes to connecting applications to event streaming platforms like Apache Kafka.

While Kafka excels at handling internal data streams and events, making this data accessible to external applications through APIs requires bridging some significant technical gaps.

How to connect Kafka to the API world with Amplify Fusion

Kafka’s architecture is built on a custom binary protocol that operates over TCP/IP, specifically designed for high-throughput message streaming.
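To make the contrast with text-based HTTP concrete, here is a minimal Python sketch of length-prefixed framing, the general technique Kafka’s wire protocol relies on (each request and response is preceded by a 4-byte big-endian size). The payloads below are arbitrary bytes, not real Kafka requests, and the function names `frame` and `read_frames` are illustrative only.

```python
import struct


def frame(payload: bytes) -> bytes:
    """Prefix a payload with its length as a 4-byte big-endian integer."""
    return struct.pack(">i", len(payload)) + payload


def read_frames(buffer: bytes):
    """Split a byte stream into payloads; return them plus leftover bytes."""
    payloads = []
    while len(buffer) >= 4:
        (size,) = struct.unpack(">i", buffer[:4])
        if len(buffer) < 4 + size:
            break  # partial frame: wait for more bytes from the socket
        payloads.append(buffer[4:4 + size])
        buffer = buffer[4 + size:]
    return payloads, buffer


# Two complete frames followed by the first 3 bytes of a third one.
stream = frame(b"first") + frame(b"second") + frame(b"third")[:3]
payloads, leftover = read_frames(stream)
```

A reader of such a stream has to keep the connection open and hold on to partial frames between reads, which is one reason a binary streaming protocol does not map directly onto a single HTTP request and response.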

Making Kafka data accessible through APIs is challenging because the two operate in fundamentally different ways. At its core, Kafka excels at continuously streaming data, tracking each consumer group’s progress through committed offsets and preserving message order within each partition.
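The progress tracking described above can be pictured with a small in-memory model. This is a toy sketch in Python, not Kafka client code: `PartitionLog` and its methods are hypothetical names that stand in for a single partition and for per-group committed offsets.

```python
from collections import defaultdict


class PartitionLog:
    """Toy stand-in for one Kafka partition: an append-only, ordered list."""

    def __init__(self):
        self._records = []
        # Per consumer group: offset of the next record to read.
        self._committed = defaultdict(int)

    def append(self, value):
        """Append a record and return the offset it was stored at."""
        self._records.append(value)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return the next (offset, value) pairs for a group, in log order."""
        start = self._committed[group]
        batch = self._records[start:start + max_records]
        return [(start + i, value) for i, value in enumerate(batch)]

    def commit(self, group, offset):
        """Record that the group has processed everything up to `offset`."""
        self._committed[group] = offset + 1


log = PartitionLog()
for event in ["invoice-created", "invoice-updated", "invoice-paid"]:
    log.append(event)

first = log.poll("billing", max_records=2)
log.commit("billing", first[-1][0])   # billing has handled offsets 0 and 1
second = log.poll("billing")          # resumes at offset 2
audit = log.poll("audit")             # a separate group starts from offset 0
```

Each group reads the same ordered log at its own pace. An API layer in front of Kafka has to decide who owns that position: the server, or the client that passes it along with each request.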

APIs, on the other hand, offer two distinct approaches: traditional request-response patterns where consumers pull data on demand, and event-driven patterns that push updates to consumers as they occur.

Even when using event-driven APIs, which seem more aligned with Kafka’s nature, architectural challenges remain.

Kafka’s specific approach to handling data streams, managing consumer groups, and maintaining message history doesn’t directly translate to typical API patterns.

This mismatch calls for careful design so that the resulting solution remains both secure and reliable.

Amplify Fusion addresses these challenges by offering flexible ways to connect Kafka with your applications. For scenarios requiring real-time data, it maintains live connections that stream Kafka events directly to your applications, preserving Kafka’s native event-driven behavior.

When immediate updates aren’t necessary, you can fall back to traditional request-response patterns to fetch data at your convenience.

This flexibility in connecting Kafka to external systems leads us to a crucial decision point: choosing the right API pattern for your specific needs.

As we’ll explore in the next section, the choice between event-driven and traditional API approaches significantly impacts how effectively you can leverage Kafka’s capabilities.

Each pattern serves different purposes in modern architectures, and understanding their strengths will help you build the most effective solution for your use case.

Selecting the right API pattern: event-driven vs traditional approaches

When connecting Kafka to external systems through Amplify Fusion, choosing the right API pattern is key to building an effective solution. The main distinction lies between event-driven APIs and traditional (non-event-driven) APIs, each serving different purposes in modern architectures.

Event-driven APIs excel at real-time communication, maintaining a continuous connection between systems. They work particularly well with Kafka’s natural event-streaming model.

Common examples of event-driven APIs:

| API Type | Transport | Key Features | Use Cases | Challenges |
| --- | --- | --- | --- | --- |
| WebSocket API | WebSocket Protocol | Bidirectional communication, Persistent connection, Full-duplex communication | Chat applications, Live dashboards, Real-time gaming, Collaborative tools | Connection state management, Reconnection handling, Scaling complexity |
| Server-Sent Events (SSE) | HTTP/HTTPS | Server-to-client streaming, Auto-reconnection, Event typing | News feeds, Status updates, Metrics streaming | One-way communication only, Limited browser support for custom headers |
| gRPC with Streams | HTTP/2 with Protocol Buffers | Bidirectional streaming, Strong typing, Protocol buffers | Microservices, High-performance systems, Service mesh | HTTP/2 requirement, Complex implementation, Limited browser support |
| Event Webhooks | HTTP Callbacks | Push-based delivery, Asynchronous notifications, Custom endpoints | Integration patterns, Event notifications, System automation | Public endpoints needed, Retry logic required, Security concerns |
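As one illustration of how an event-driven API can carry Kafka records, the sketch below serializes a record as a Server-Sent Events frame. The `id`, `event`, and `data` fields and the `Last-Event-ID` request header are part of the SSE format. Using the Kafka offset as the event id is a design assumption made here, and the function names and sample payload are hypothetical.

```python
import json


def to_sse_frame(offset, event_type, payload):
    """Serialize one record as a Server-Sent Events frame."""
    data = json.dumps(payload, separators=(",", ":"))
    lines = [f"id: {offset}", f"event: {event_type}"]
    # Each line of the payload needs its own "data:" field.
    lines.extend(f"data: {line}" for line in data.splitlines())
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


def resume_offset(last_event_id):
    """Map a Last-Event-ID header value to the next offset to send."""
    if last_event_id is None or not last_event_id.strip().isdecimal():
        return 0
    return int(last_event_id) + 1


frame = to_sse_frame(41, "invoice.updated", {"invoice_id": "INV-7", "status": "paid"})
next_offset = resume_offset("41")
```

Because the browser sends back the last id it saw when it reconnects, the server can pick up the stream at the following offset. That is roughly the role a committed offset plays for a Kafka consumer.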

While event-driven APIs provide real-time capabilities crucial for dynamic applications, there are scenarios where traditional API approaches might be more suitable.

These non-event-driven APIs follow simpler request-response patterns and can be a better fit for less time-critical operations.

Common examples of traditional APIs:

| API Type | Transport | Key Features | Use Cases | Challenges |
| --- | --- | --- | --- | --- |
| REST API | HTTP/HTTPS | Request-response pattern, CRUD operations, Kafka as backend store, Stateless communication | CRUD applications, Data synchronization, System integration, Mobile/web clients | Not real-time by default, Eventual consistency, Event ordering complexity |
| Polling API | HTTP/HTTPS | Regular interval requests, Batch processing, Stateless | Regular data sync, Non-critical updates, Basic monitoring | Higher latency, Server load with many clients, Unnecessary requests |
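A request-response API over a stream usually needs some form of cursor so that a stateless server can tell each client where to continue. The following Python sketch shows that idea with an in-memory list standing in for a topic. The field names `items`, `next_offset`, and `has_more` are assumptions chosen for the example, not part of any specific product API.

```python
def fetch_page(records, offset=0, limit=2):
    """Request-response handler: return one page plus the next cursor."""
    page = records[offset:offset + limit]
    next_offset = offset + len(page)
    return {
        "items": page,
        "next_offset": next_offset,
        "has_more": next_offset < len(records),
    }


def poll_until_caught_up(records, limit=2):
    """Client side: repeat requests, carrying the cursor between calls."""
    offset, seen, requests = 0, [], 0
    while True:
        response = fetch_page(records, offset, limit)
        requests += 1
        seen.extend(response["items"])
        offset = response["next_offset"]
        if not response["has_more"]:
            return seen, requests


topic = ["evt-0", "evt-1", "evt-2", "evt-3", "evt-4"]
seen, requests = poll_until_caught_up(topic)
```

The client, not the server, remembers its position. This keeps the API stateless, at the cost of extra round trips and the latency listed in the table above.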

How to implement multi-pattern Kafka integration with Amplify

Amplify Fusion’s Designer module enables you to build integrations using both event-driven and traditional API patterns, combined with its built-in Apache Kafka connector.

For event-driven patterns, you can set up real-time flows where data streams instantly from Kafka to your applications as changes occur. For traditional patterns, you can create flows where applications request Kafka data on-demand.

Whether your Kafka environment runs on premises, in Confluent Cloud, or on any other cloud platform, you can build these integration flows to match your specific needs.

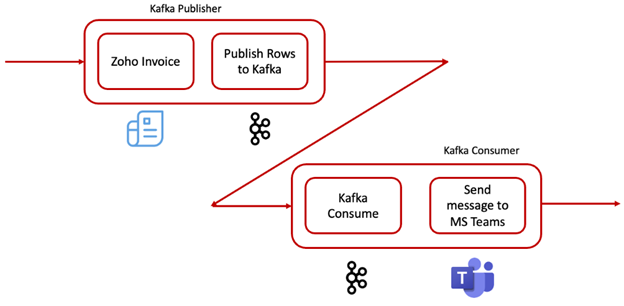

This lab demonstrates Amplify Fusion’s flexibility through a practical example. The integration flow consists of two main parts:

- A Kafka Publisher that uses a scheduled polling mechanism to query Zoho Invoice for changes. When it finds new or modified invoices, it publishes these updates to Kafka.

- A Kafka Consumer that works in an event-driven way, continuously listening for these messages from Kafka. As soon as it receives an update, it automatically processes the invoice data and sends notifications to stakeholders through Microsoft Teams via a webhook.

This entire data flow is illustrated below:

This shows how Amplify Fusion seamlessly bridges traditional API patterns with event-driven approaches, using Kafka to connect systems that communicate in different ways.
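In Amplify Fusion this flow is assembled visually in the Designer module, so no code is required. Purely to show the logic of the two halves, here is a rough Python sketch under several assumptions: the invoice snapshots are made-up data rather than Zoho Invoice API responses, a `queue.Queue` stands in for the Kafka topic, and the `{"text": ...}` body is a simplified message shape that should be checked against the Microsoft Teams webhook documentation before use.

```python
import json
import queue

# Hypothetical invoice snapshots standing in for two scheduled polls.
POLL_RESULTS = [
    [{"id": "INV-1", "status": "draft", "modified": 100}],
    [{"id": "INV-1", "status": "sent", "modified": 160},
     {"id": "INV-2", "status": "draft", "modified": 150}],
]


def publish_changes(invoices, last_seen, topic):
    """Publisher side: enqueue invoices modified since the last poll."""
    newest = last_seen
    for invoice in invoices:
        if invoice["modified"] > last_seen:
            topic.put(json.dumps(invoice))
            newest = max(newest, invoice["modified"])
    return newest


def to_teams_payload(message):
    """Consumer side: turn one message into a simple webhook body."""
    invoice = json.loads(message)
    return {"text": f"Invoice {invoice['id']} is now {invoice['status']}"}


topic = queue.Queue()  # stand-in for a Kafka topic
watermark = 0
for snapshot in POLL_RESULTS:
    watermark = publish_changes(snapshot, watermark, topic)

# A real consumer would listen continuously; here we simply drain the queue.
notifications = []
while not topic.empty():
    notifications.append(to_teams_payload(topic.get()))
```

The publisher is driven by a schedule and a "last modified" watermark, while the consumer reacts to whatever arrives on the topic. Neither side needs to know how the other one communicates.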

Benefits of using Amplify Fusion with Apache Kafka

The combination of Amplify Fusion and Apache Kafka provides organizations with operational advantages in their daily integration workflows. Through the platform’s interface, teams gain visibility into their Kafka message flows, allowing them to monitor and troubleshoot data streams in real time.

This visibility extends to detailed error logging, making it easier to identify and resolve issues that might arise during message processing.

Connecting different systems becomes straightforward with Amplify Fusion’s pre-built connectors.

Rather than spending time on complex coding, teams can quickly establish connections between Kafka and various applications through a visual interface.

This simplification doesn’t come at the cost of functionality: the platform still enables integration patterns and custom triggers that can automate responses to specific events or conditions in the data stream.

The ability to build and modify triggers directly within the platform transforms how organizations can react to their streaming data. Teams can set up automated actions based on message content, timing, or patterns, enabling proactive responses to business events.
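Conceptually, a content-based trigger pairs a condition with an action. The sketch below expresses that idea in plain Python. It does not reflect how Amplify Fusion defines triggers internally, and the `Trigger` class, rule names, and thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Trigger:
    name: str
    condition: Callable[[dict], bool]  # decides whether the trigger fires
    action: Callable[[dict], str]      # what to do when it fires


def run_triggers(message, triggers):
    """Return the results of every trigger whose condition matches."""
    return [t.action(message) for t in triggers if t.condition(message)]


TRIGGERS = [
    Trigger(
        name="large-invoice",
        condition=lambda m: m.get("amount", 0) >= 10_000,
        action=lambda m: f"escalate {m['id']}",
    ),
    Trigger(
        name="overdue",
        condition=lambda m: m.get("status") == "overdue",
        action=lambda m: f"remind {m['id']}",
    ),
]

results = run_triggers({"id": "INV-9", "amount": 12_500, "status": "overdue"}, TRIGGERS)
quiet = run_triggers({"id": "INV-10", "amount": 40, "status": "paid"}, TRIGGERS)
```

Keeping conditions and actions separate means new rules can be added without touching the code that consumes the stream.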

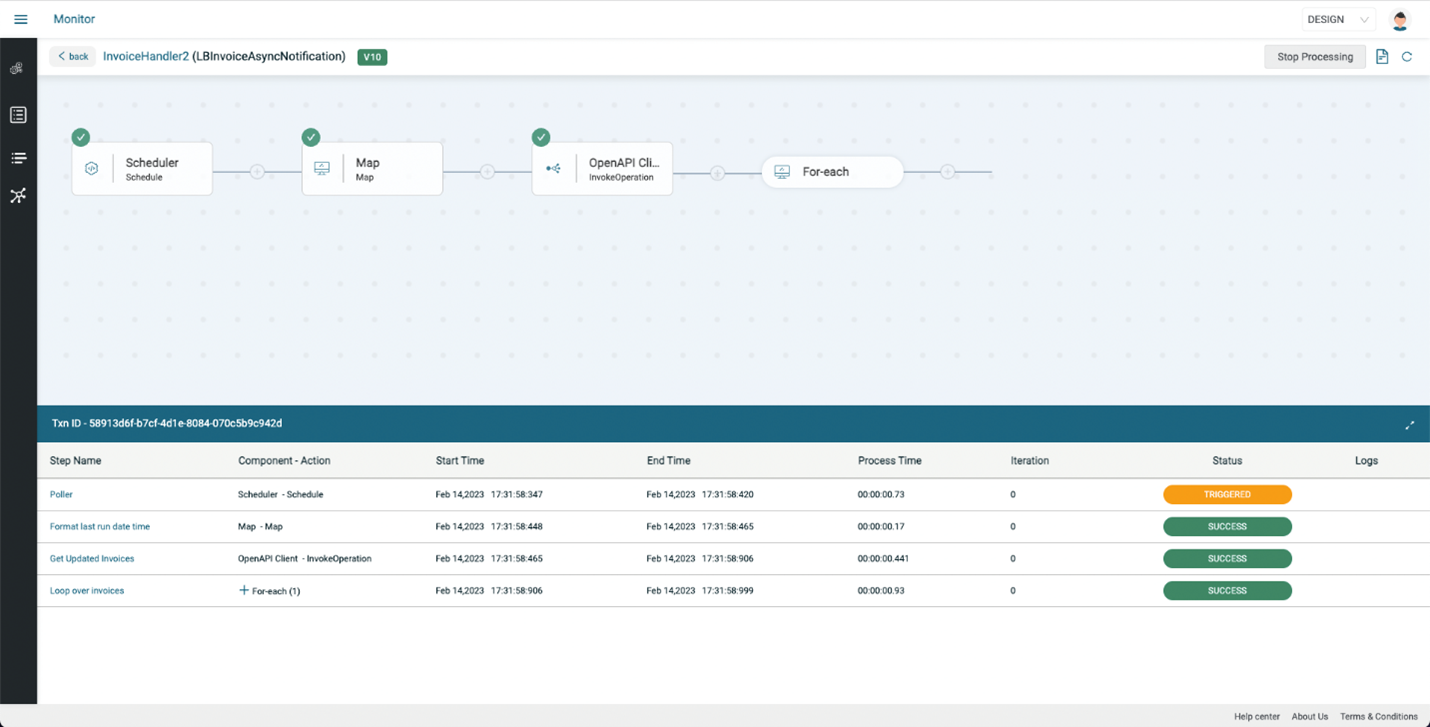

Here’s an example of a transaction overview:

When combined with the platform’s error logging and monitoring capabilities, this creates an environment where teams can not only track their data flows but also automate responses to both routine operations and exception scenarios.

Learn more about Amplify Fusion.